Stereo DSO: Large-Scale Direct Sparse Visual Odometry with Stereo Cameras

Abstract

Stereo DSO is a novel method for highly accurate real-time visual odometry estimation of large-scale environments from stereo cameras. It jointly optimizes all model parameters within the active window, including the intrinsic/extrinsic camera parameters of all keyframes and the depth values of all selected pixels. In particular, it integrates constraints from static stereo into the bundle adjustment pipeline of temporal multi-view stereo. Real-time optimization is realized by sampling pixels uniformly from image regions with sufficient intensity gradient. Fixed-baseline stereo resolves scale drift. It also reduces the sensitivity to large optical flow and to rolling-shutter effects, both of which are known shortcomings of direct image alignment methods. Quantitative evaluation demonstrates that the proposed Stereo DSO outperforms existing state-of-the-art visual odometry methods in terms of both tracking accuracy and robustness. Moreover, our method delivers a more precise metric 3D reconstruction than previous dense/semi-dense direct approaches while providing a higher reconstruction density than feature-based methods.
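To make the pixel sampling mentioned in the abstract more concrete, here is a minimal sketch of gradient-based point selection. It is a simplified illustration, not the selection scheme of the paper: the function name `select_points`, the fixed block size, and the fixed threshold `min_grad` are all assumptions made for this example.

```python
import numpy as np

def select_points(image, block=8, min_grad=10.0):
    """Pick at most one pixel per block: the one with the largest gradient
    magnitude, kept only if that magnitude exceeds min_grad.

    block and min_grad are illustrative values, not taken from the paper.
    """
    img = np.asarray(image, dtype=np.float64)
    gy, gx = np.gradient(img)            # finite differences along rows, columns
    mag = np.hypot(gx, gy)               # gradient magnitude per pixel
    h, w = mag.shape
    points = []
    for r0 in range(0, h, block):
        for c0 in range(0, w, block):
            cell = mag[r0:r0 + block, c0:c0 + block]
            r, c = np.unravel_index(np.argmax(cell), cell.shape)
            if cell[r, c] > min_grad:    # skip textureless blocks
                points.append((r0 + int(r), c0 + int(c)))
    return points

# Usage: a vertical step edge yields points only next to the edge.
edge = np.zeros((16, 16))
edge[:, 8:] = 100.0
print(select_points(edge))
```

Choosing one candidate per block spreads the points over the image, while the threshold discards regions that carry no usable intensity gradient.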

Citation

If you find our work useful in your research, please consider citing:

@inproceedings{wang2017stereoDSO,
  title={Stereo DSO: Large-Scale Direct Sparse Visual Odometry with Stereo Cameras},
  author={R. Wang and M. Schw\"orer and D. Cremers},
  booktitle={International Conference on Computer Vision (ICCV)},
  year={2017},
  month={October},
  address={Venice, Italy}
}

Download

- The estimated camera trajectories of all the sequences of KITTI Odometry: training (00-10), testing (11-21).

- The paper and its supplementary document.

Results

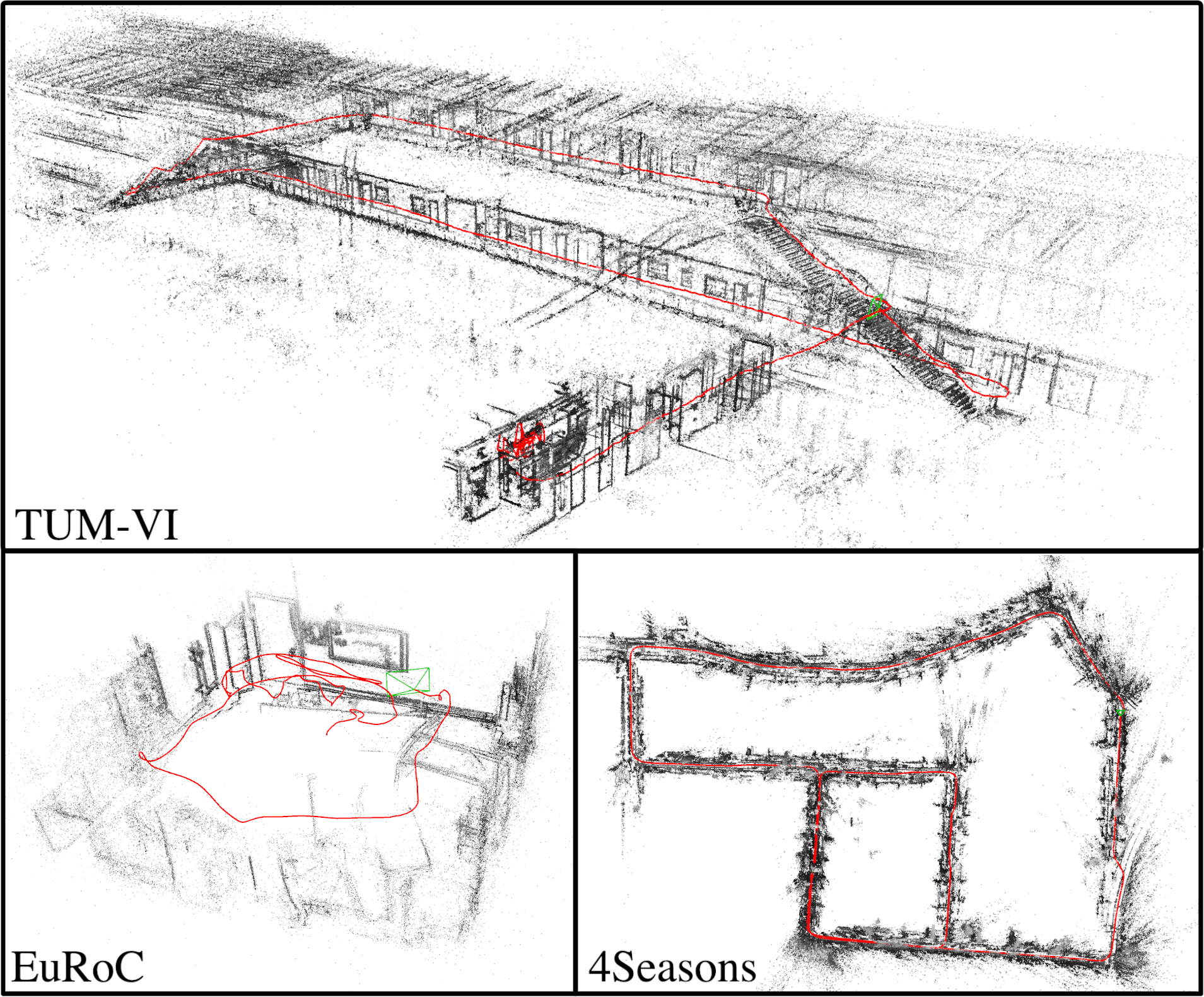

We evaluate our method on the KITTI Visual Odometry Benchmark and the Frankfurt sequence of the Cityscapes Dataset. The full evaluation results can be found in the supplementary material of our ICCV 2017 paper. Below we show some representative results.

KITTI Visual Odometry Benchmark

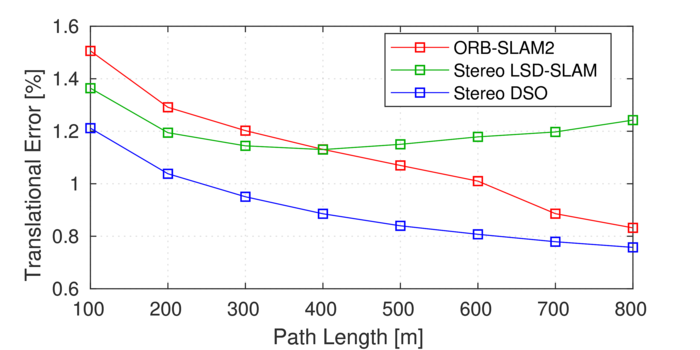

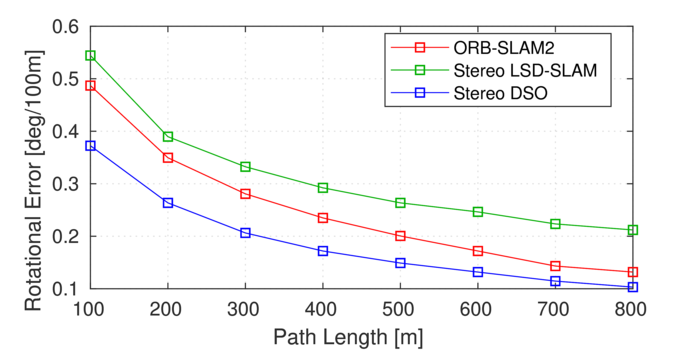

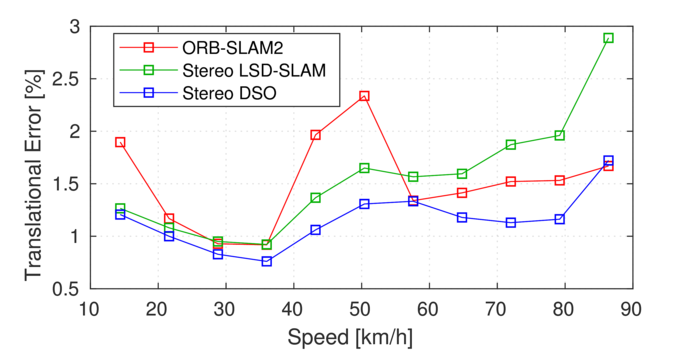

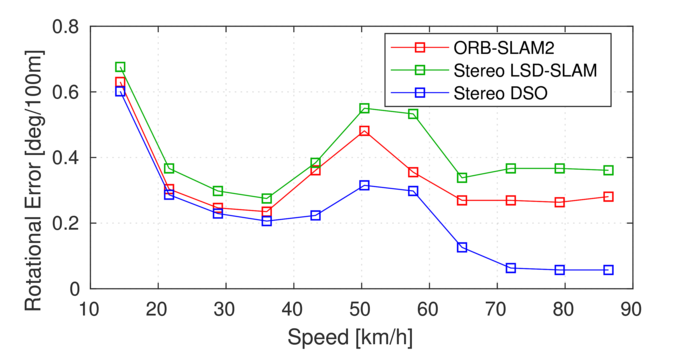

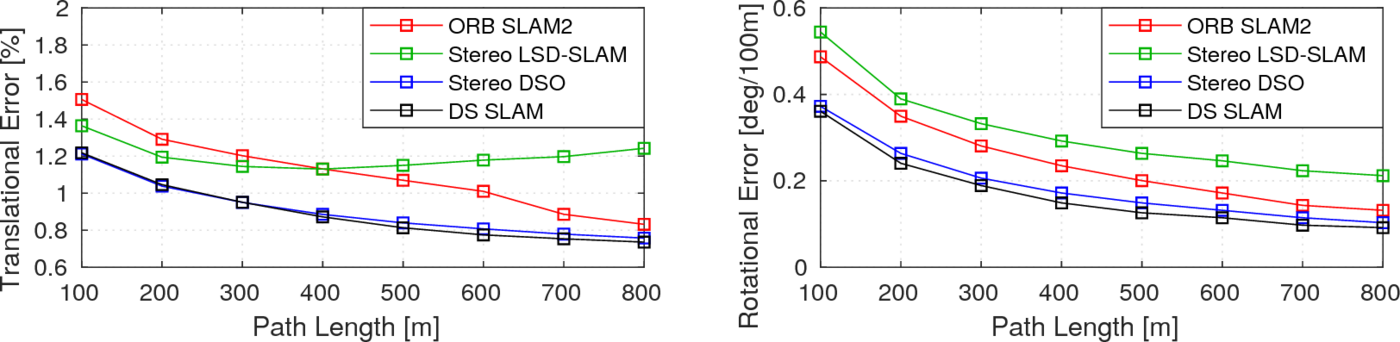

The following four figures show the average translational and rotational errors with respect to driving intervals (first row) and driving speed (second row) on the KITTI VO testing set. We compare our method with the current state-of-the-art direct and feature-based methods, namely Stereo LSD-SLAM and ORB-SLAM2. Note that both compared methods are SLAM systems with loop closure based on pose graph optimization (ORB-SLAM2 additionally uses global bundle adjustment), while ours is pure visual odometry.

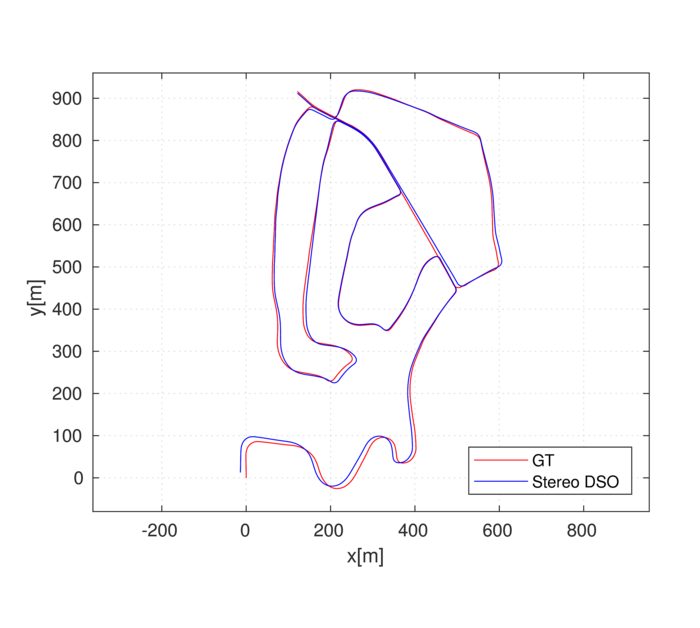

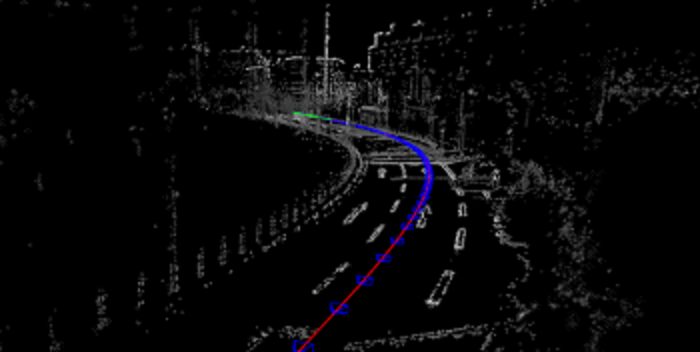

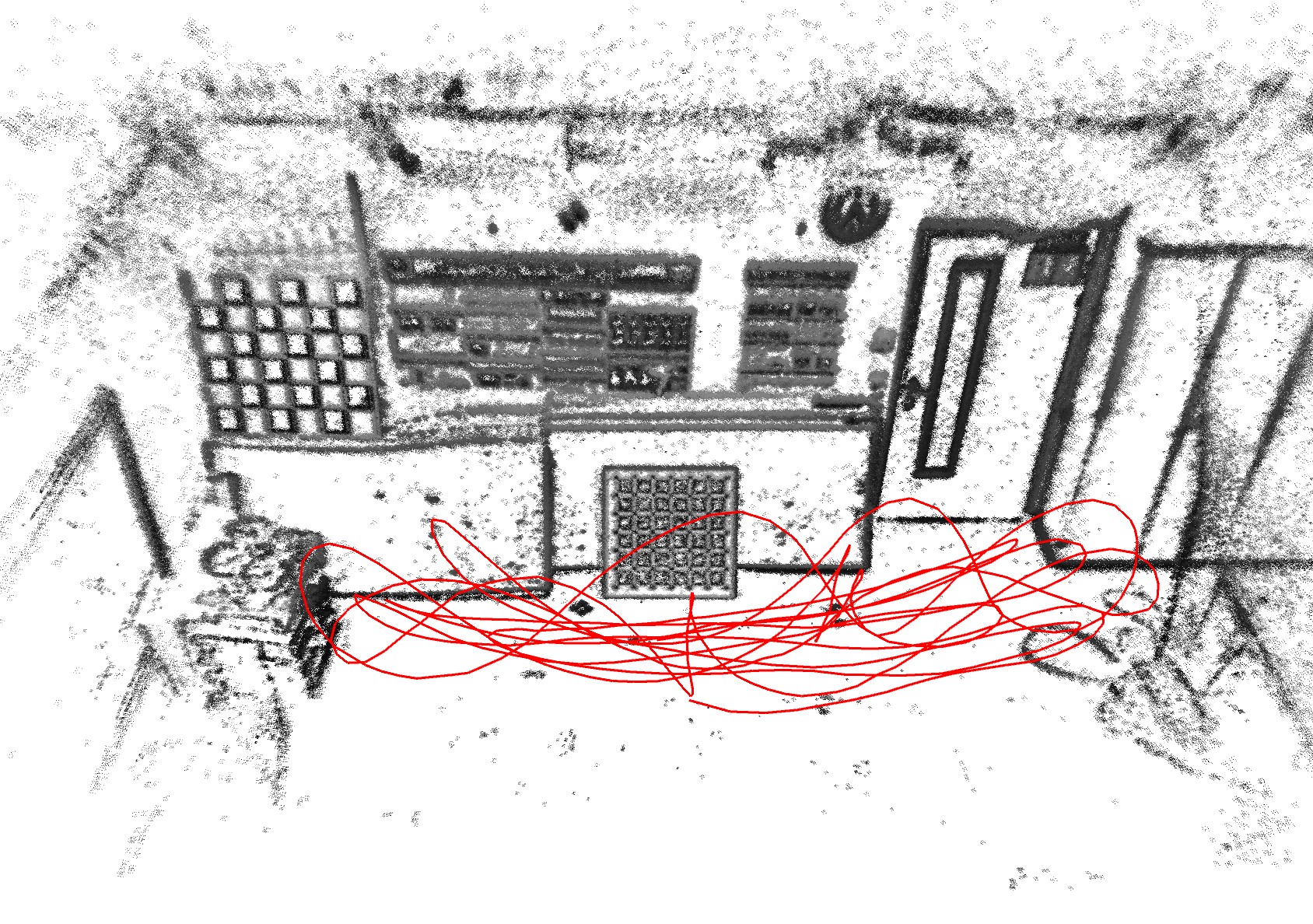

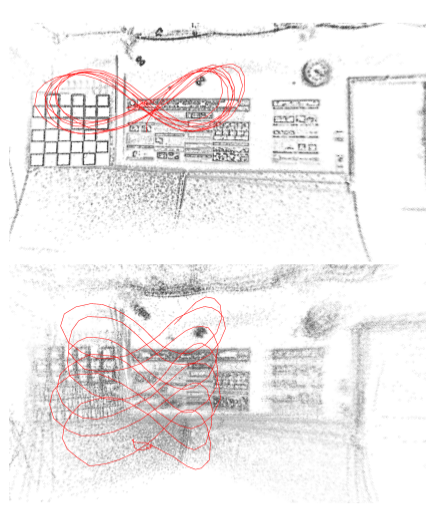

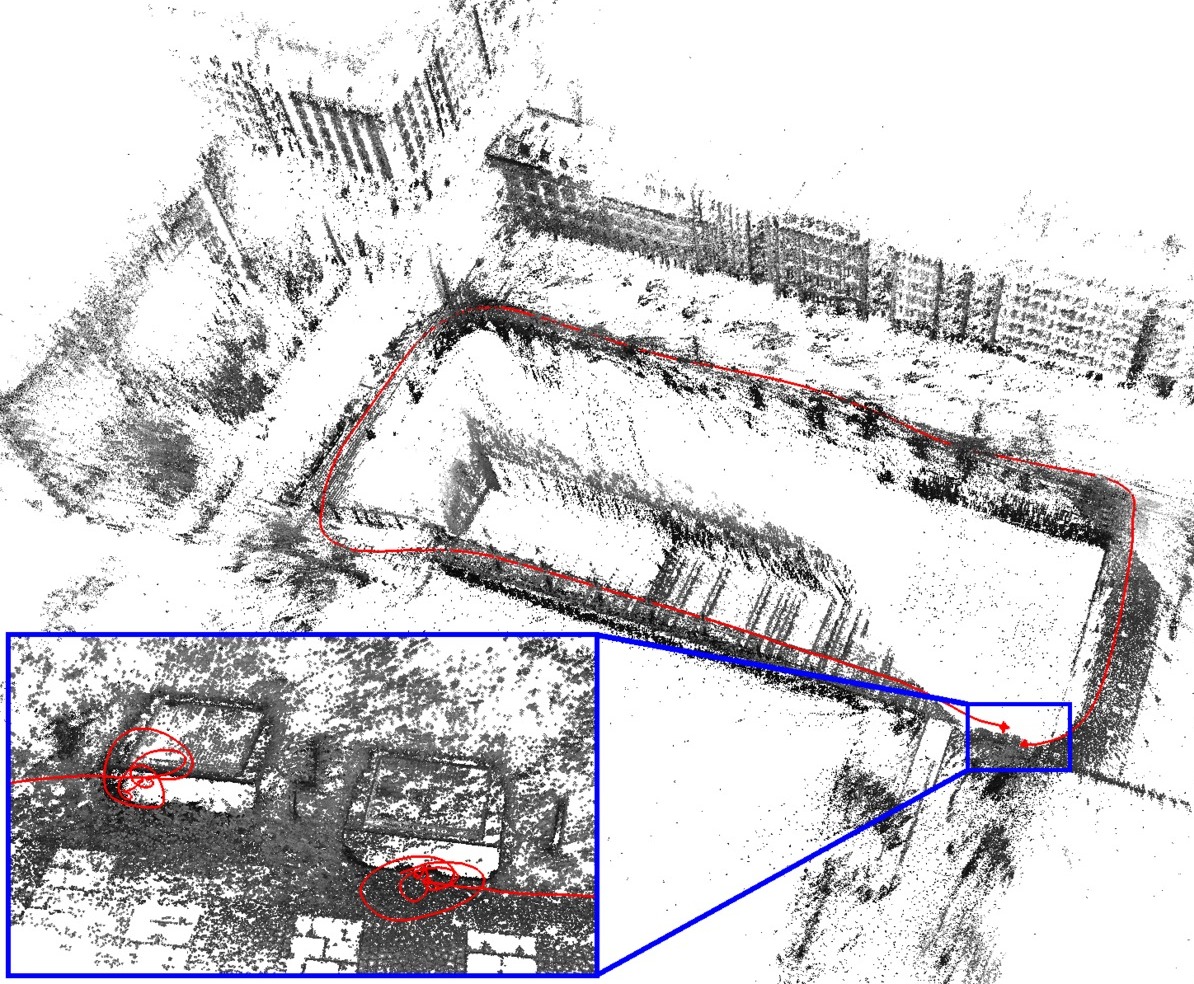
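The translational and rotational errors plotted above are relative errors over sub-sequences of a trajectory. The sketch below shows one way to compute them, assuming poses are given as 4x4 camera-to-world matrices and following the conventions of the KITTI odometry development kit as we understand them (a new segment every 10 frames, errors normalized by segment length). The function names are hypothetical, and the rotation error is returned in rad/m, whereas the benchmark reports deg/m.

```python
import numpy as np

def path_distances(poses):
    """Cumulative distance travelled along a list of 4x4 poses."""
    steps = [np.linalg.norm(b[:3, 3] - a[:3, 3]) for a, b in zip(poses, poses[1:])]
    return np.concatenate(([0.0], np.cumsum(steps)))

def segment_errors(gt, est, length, step=10):
    """Relative errors over all sub-sequences of roughly `length` metres.

    Returns a list of (rotation error in rad/m, translation error as a fraction).
    """
    dist = path_distances(gt)
    errors = []
    for first in range(0, len(gt), step):
        # First frame whose travelled distance exceeds the start by `length`.
        ahead = np.nonzero(dist > dist[first] + length)[0]
        if ahead.size == 0:
            break                        # remaining trajectory is too short
        last = ahead[0]
        gt_delta = np.linalg.inv(gt[first]) @ gt[last]
        est_delta = np.linalg.inv(est[first]) @ est[last]
        err = np.linalg.inv(est_delta) @ gt_delta   # residual relative motion
        cos_angle = np.clip((np.trace(err[:3, :3]) - 1.0) / 2.0, -1.0, 1.0)
        errors.append((np.arccos(cos_angle) / length,
                       np.linalg.norm(err[:3, 3]) / length))
    return errors
```

Averaging these pairs over several segment lengths (the KITTI kit uses 100 m to 800 m) gives one curve per method; multiplying the translation fraction by 100 yields the familiar percentage.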

As qualitative results, we run our method on all sequences of the training set and compare the estimated camera trajectories to the provided ground truth. Results on some example sequences follow. All estimated camera trajectories are available for download (Camera Trajectories).

Update July 2017: After the ICCV 2017 deadline, we extended our method to a SLAM system with additional components for map maintenance, loop detection and loop closure. This slightly improves our performance on KITTI further, as shown by the black plot below. A demonstration video is shown above.

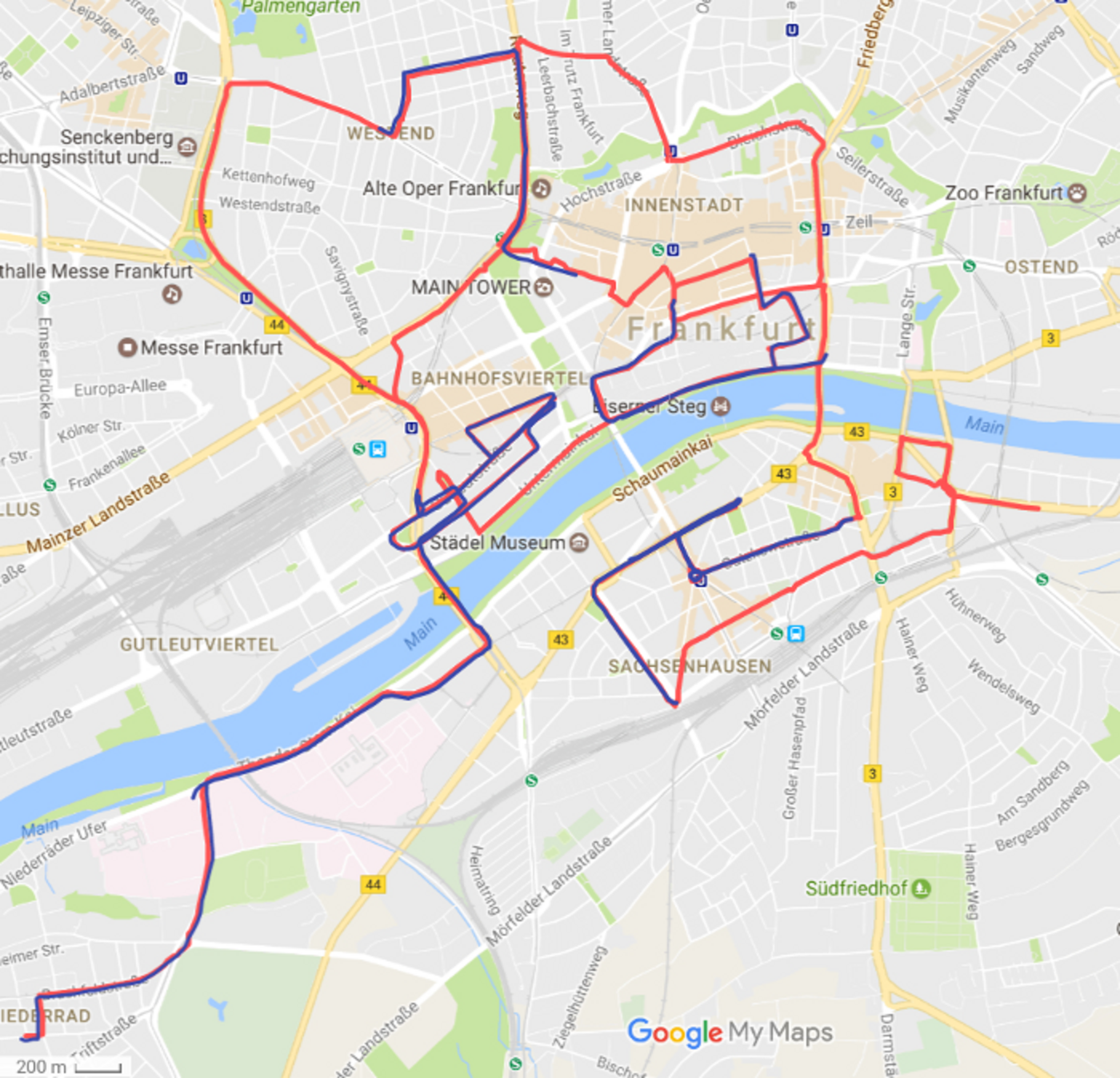

Frankfurt Sequence of Cityscapes

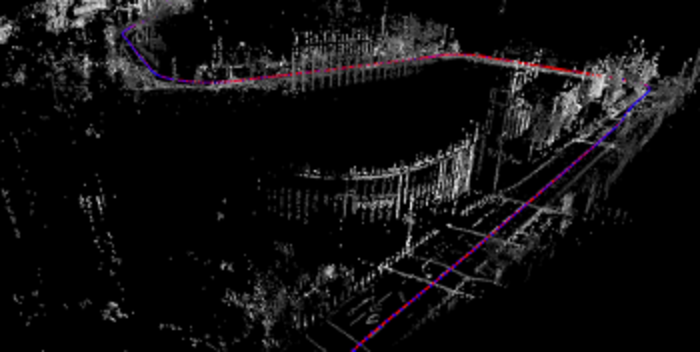

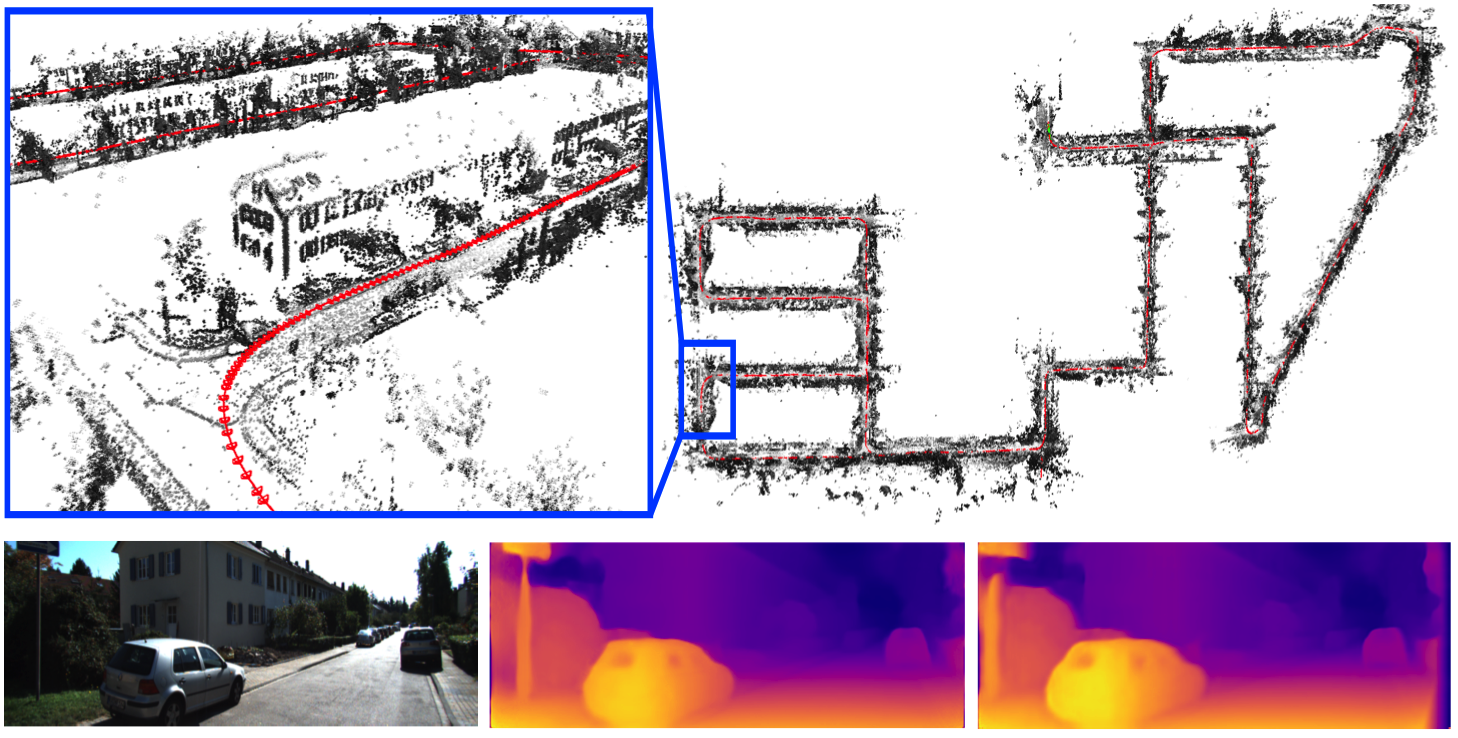

To verify that our method works with industrial-grade cameras (high dynamic range, rolling shutter with high pixel read-out speed), we evaluate it on the Frankfurt sequence from the Cityscapes dataset. We split the sequence into several smaller segments, each comparable in scale to the KITTI sequences. The estimated camera trajectories, aligned to the GPS trajectory, are shown below (blue: estimates, red: GPS). Note that the provided GPS coordinates are not accurate.

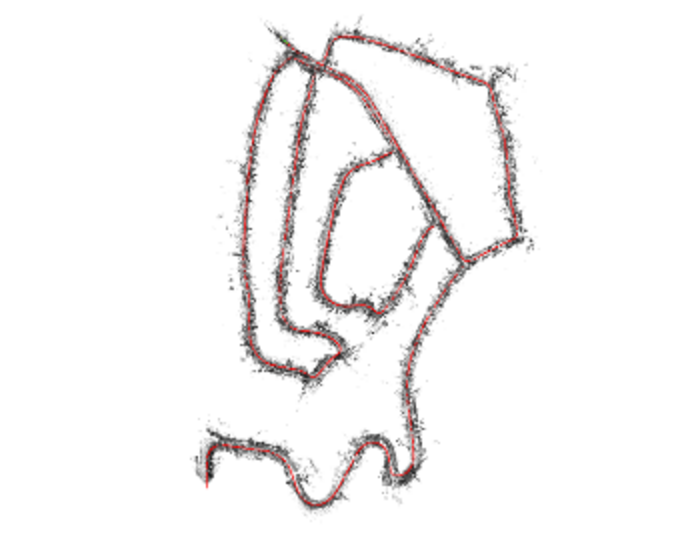
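The page does not state how the estimated trajectories are aligned to the GPS track. One common choice, sketched here purely as an assumption, is a least-squares rigid alignment (rotation and translation, no scale) of corresponding positions using the Kabsch method. The function name `align_rigid` is hypothetical.

```python
import numpy as np

def align_rigid(est, ref):
    """Least-squares R, t such that R @ est_i + t approximates ref_i.

    est, ref: (N, 3) arrays of corresponding positions.
    """
    est = np.asarray(est, dtype=np.float64)
    ref = np.asarray(ref, dtype=np.float64)
    mu_e, mu_r = est.mean(axis=0), ref.mean(axis=0)
    H = (est - mu_e).T @ (ref - mu_r)        # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_r - R @ mu_e
    return R, t

# Usage: aligned = est @ R.T + t, which can then be plotted over the reference.
```

Scale is left out of the alignment because a fixed stereo baseline already provides metric scale; for a monocular system a similarity alignment would be the usual alternative.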

Some qualitative results on the 3D reconstruction are shown below.

Publications

Journal Articles

2022

DM-VIO: Delayed Marginalization Visual-Inertial Odometry, In IEEE Robotics and Automation Letters (RA-L) & International Conference on Robotics and Automation (ICRA), volume 7, 2022. ([arXiv][video][project page][supplementary][code])

2018

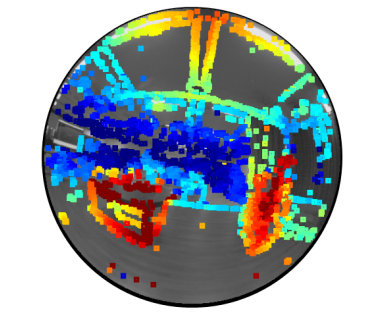

Omnidirectional DSO: Direct Sparse Odometry with Fisheye Cameras, In IEEE Robotics and Automation Letters & Int. Conference on Intelligent Robots and Systems (IROS), IEEE, 2018. ([arxiv])

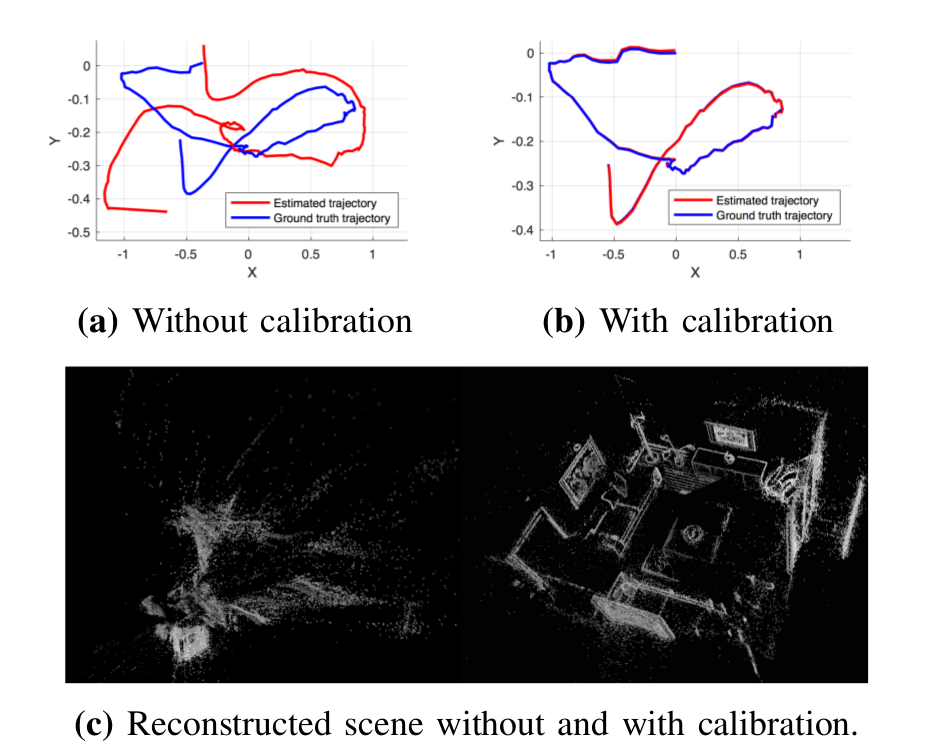

Online Photometric Calibration of Auto Exposure Video for Realtime Visual Odometry and SLAM, In IEEE Robotics and Automation Letters (RA-L), volume 3, 2018. (This paper was also selected by ICRA'18 for presentation at the conference. [arxiv][video][code][project])

ICRA'18 Best Vision Paper Award - Finalist

Direct Sparse Odometry, In IEEE Transactions on Pattern Analysis and Machine Intelligence, 2018.

Conference and Workshop Papers

2021

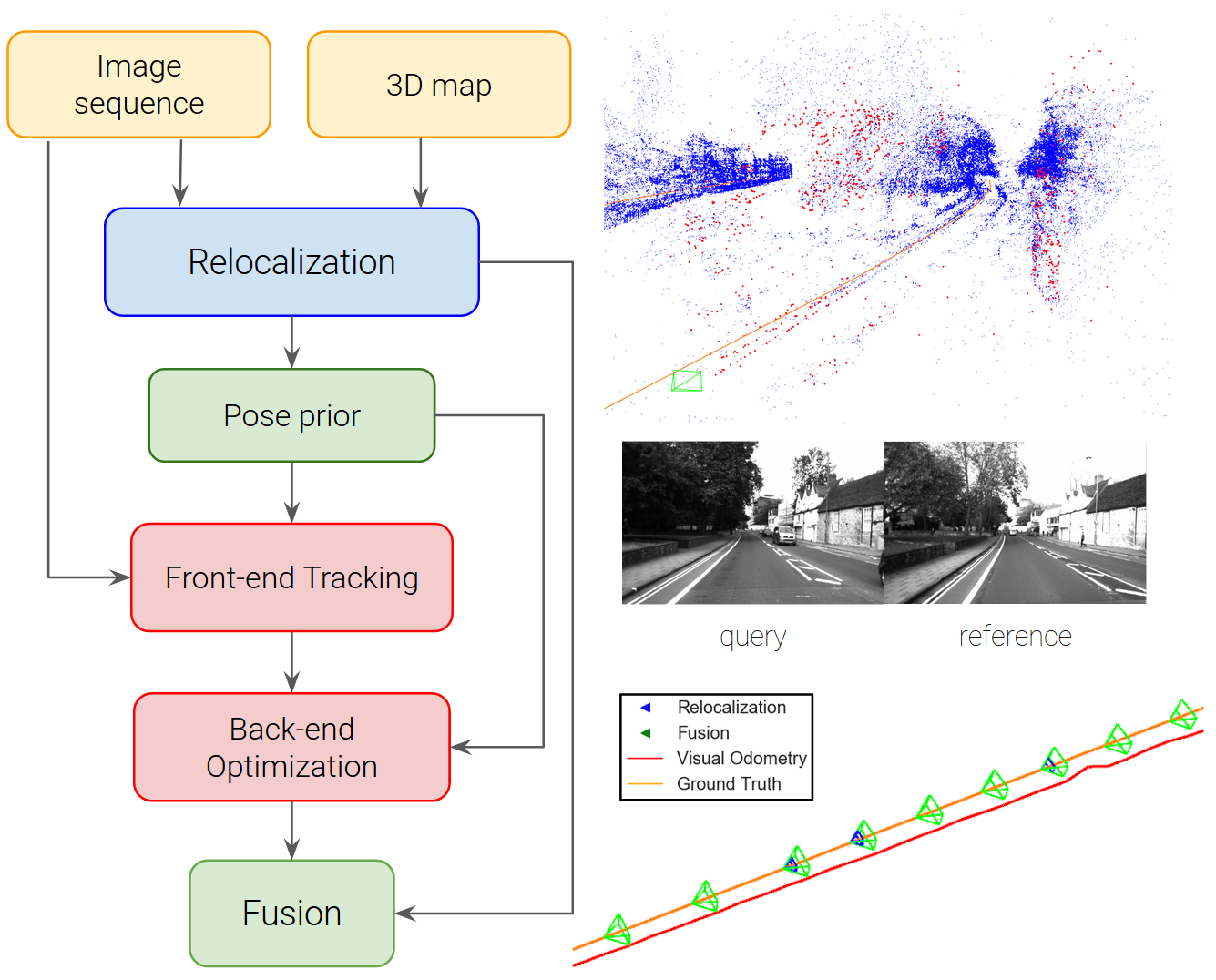

Tight Integration of Feature-based Relocalization in Monocular Direct Visual Odometry, In Proc. of the IEEE International Conference on Robotics and Automation (ICRA), 2021. ([project page])

2020

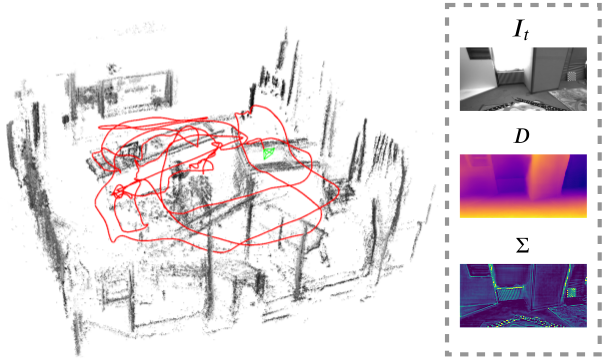

D3VO: Deep Depth, Deep Pose and Deep Uncertainty for Monocular Visual Odometry, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2020.

Oral Presentation

2019

Rolling-Shutter Modelling for Visual-Inertial Odometry, In International Conference on Intelligent Robots and Systems (IROS), 2019. ([arxiv])

2018

Direct Sparse Odometry With Rolling Shutter, In European Conference on Computer Vision (ECCV), 2018. ([supplementary][arxiv])

Oral Presentation

Deep Virtual Stereo Odometry: Leveraging Deep Depth Prediction for Monocular Direct Sparse Odometry, In European Conference on Computer Vision (ECCV), 2018. ([arxiv][supplementary][project])

Oral Presentation

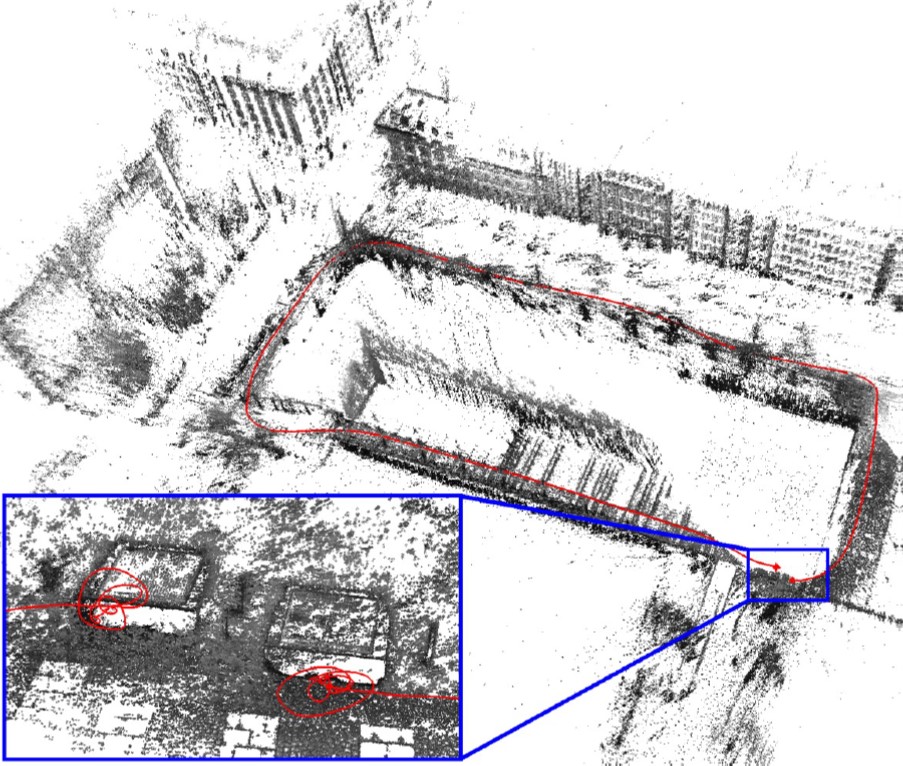

LDSO: Direct Sparse Odometry with Loop Closure, In International Conference on Intelligent Robots and Systems (IROS), 2018. ([arxiv][video][code][project])

Direct Sparse Visual-Inertial Odometry using Dynamic Marginalization, In International Conference on Robotics and Automation (ICRA), 2018. ([supplementary][video][arxiv])

2017

Stereo DSO: Large-Scale Direct Sparse Visual Odometry with Stereo Cameras, In International Conference on Computer Vision (ICCV), 2017. ([supplementary][video][arxiv][project])

2016

Direct Sparse Odometry, In arXiv:1607.02565, 2016.

A Photometrically Calibrated Benchmark For Monocular Visual Odometry, In arXiv:1607.02555, 2016.