Marker-less Motion Capture

In this project, we develop statistical and energy minimization methods for tracking articulated 3D objects from multiple camera views. Such techniques are central to markerless motion capture. The human motion extracted from multiple videos can then be used to animate virtual characters, as is common in action movies.
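The energy-minimization idea can be illustrated with a toy example. The following is a minimal Python sketch under strong simplifying assumptions, not the method used in the publications below. A hypothetical three-degree-of-freedom arm (shoulder yaw and pitch, elbow bend) is observed by two assumed calibrated pinhole cameras. The energy is the sum of squared reprojection errors of the joint positions, whereas the publications work with image cues such as contours, regions, and optic flow. It is minimized with a damped Gauss-Newton method, and tracking comes from initializing each frame with the previous frame's estimate. All names (`forward_kinematics`, `fit_pose`, `track`, the link lengths and camera parameters) are illustrative.

```python
import math

# Hypothetical articulated chain: shoulder yaw + pitch, then an elbow bend in the same plane.
LINK_LENGTHS = (1.0, 0.8)

def forward_kinematics(theta):
    """3D elbow and wrist positions for joint angles theta = (yaw, pitch1, pitch2)."""
    yaw, p1, p2 = theta
    l1, l2 = LINK_LENGTHS
    elbow = (l1 * math.cos(p1) * math.cos(yaw),
             l1 * math.cos(p1) * math.sin(yaw),
             l1 * math.sin(p1))
    a = p1 + p2  # absolute pitch of the forearm
    wrist = (elbow[0] + l2 * math.cos(a) * math.cos(yaw),
             elbow[1] + l2 * math.cos(a) * math.sin(yaw),
             elbow[2] + l2 * math.sin(a))
    return [elbow, wrist]

# Two assumed calibrated pinhole cameras: (rotation R, translation t, focal length f).
CAMERAS = [
    ([[1, 0, 0], [0, 1, 0], [0, 0, 1]], (0.0, 0.0, 5.0), 500.0),   # front view
    ([[0, 0, 1], [0, 1, 0], [-1, 0, 0]], (0.0, 0.0, 5.0), 500.0),  # side view, 90 deg about y
]

def project(camera, point):
    """Pinhole projection of a 3D world point to pixel coordinates."""
    R, t, f = camera
    xc = [sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3)]
    return (f * xc[0] / xc[2], f * xc[1] / xc[2])

def residuals(theta, observations):
    """Reprojection errors of all joints in all views, stacked into one vector."""
    res = []
    joints = forward_kinematics(theta)
    for cam, obs_2d in zip(CAMERAS, observations):
        for joint, (u_obs, v_obs) in zip(joints, obs_2d):
            u, v = project(cam, joint)
            res.extend([u - u_obs, v - v_obs])
    return res

def solve(A, b):
    """Gaussian elimination with partial pivoting for a small dense system A x = b."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def fit_pose(theta0, observations, iters=20, damping=1e-3, eps=1e-6):
    """Minimize E(theta) = sum of squared residuals with damped Gauss-Newton."""
    theta = list(theta0)
    n = len(theta)
    for _ in range(iters):
        r = residuals(theta, observations)
        # Forward-difference Jacobian, stored column-wise: cols[k][m] = d r_m / d theta_k.
        cols = []
        for k in range(n):
            shifted = theta[:]
            shifted[k] += eps
            cols.append([(a - b) / eps for a, b in zip(residuals(shifted, observations), r)])
        # Normal equations: (J^T J + damping * I) delta = -J^T r.
        A = [[sum(ci * cj for ci, cj in zip(cols[i], cols[j])) + (damping if i == j else 0.0)
              for j in range(n)] for i in range(n)]
        g = [-sum(c * rm for c, rm in zip(cols[i], r)) for i in range(n)]
        delta = solve(A, g)
        theta = [a + d for a, d in zip(theta, delta)]
    return theta

def track(sequence, theta_init):
    """Fit every frame, warm-starting from the previous frame's estimate."""
    estimates, theta = [], theta_init
    for observations in sequence:
        theta = fit_pose(theta, observations)
        estimates.append(theta)
    return estimates

# Synthetic, noise-free test sequence: the true angles drift slowly over 5 frames.
truth = [(0.3 + 0.05 * k, 0.2 - 0.03 * k, 0.6 + 0.04 * k) for k in range(5)]
sequence = [[[project(cam, j) for j in forward_kinematics(th)] for cam in CAMERAS]
            for th in truth]
estimates = track(sequence, theta_init=(0.25, 0.25, 0.5))
for est, th in zip(estimates, truth):
    print([round(e, 4) for e in est], th)
```

Warm-starting from the previous frame keeps the optimizer near the correct local minimum when the motion between frames is small; with real images, richer cues and motion priors would likely be needed.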

Related publications


Book Chapters

2007

Contours, optic flow, and prior knowledge: cues for capturing 3D human motion in videos, Chapter in Human Motion - Understanding, Modeling, Capture, and Animation, Springer, 2007.

Journal Articles

2015

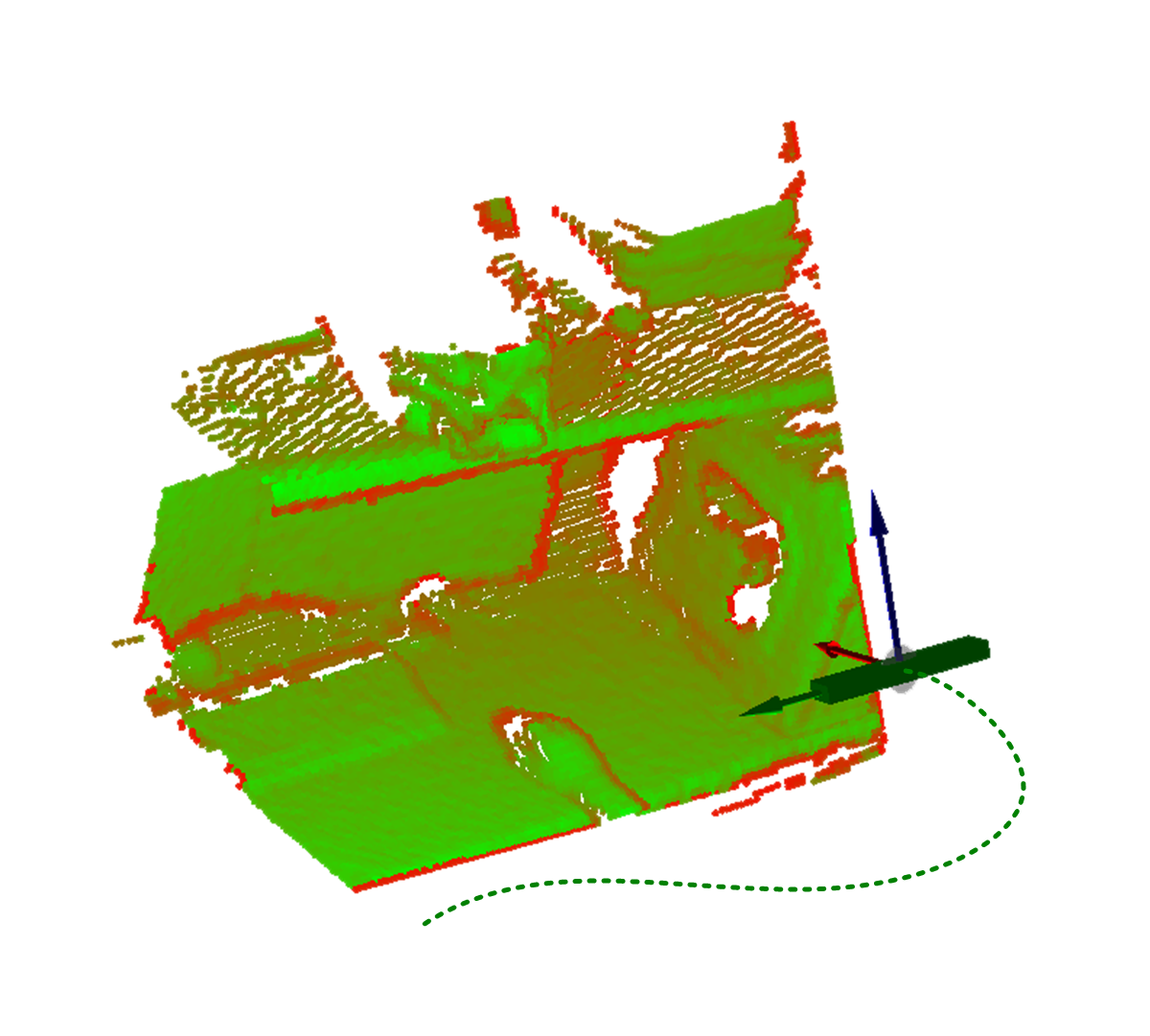

Fast Visual Odometry for 3-D Range Sensors, In IEEE Transactions on Robotics, volume 31, 2015. ([video])

2009

Combined region- and motion-based 3D tracking of rigid and articulated objects, In IEEE Transactions on Pattern Analysis and Machine Intelligence, volume 32, 2009.

2007

Three-dimensional shape knowledge for joint image segmentation and pose tracking, In International Journal of Computer Vision, volume 73, 2007. (available online)

Preprints

2021

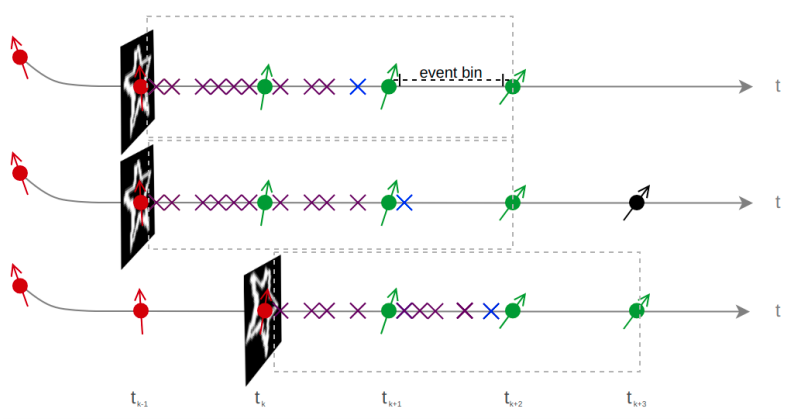

Event-Based Feature Tracking in Continuous Time with Sliding Window Optimization, In arXiv preprint, 2021.

Conference and Workshop Papers

2022

DirectTracker: 3D Multi-Object Tracking Using Direct Image Alignment and Photometric Bundle Adjustment, In International Conference on Intelligent Robots and Systems (IROS), 2022. ([project page])

2017

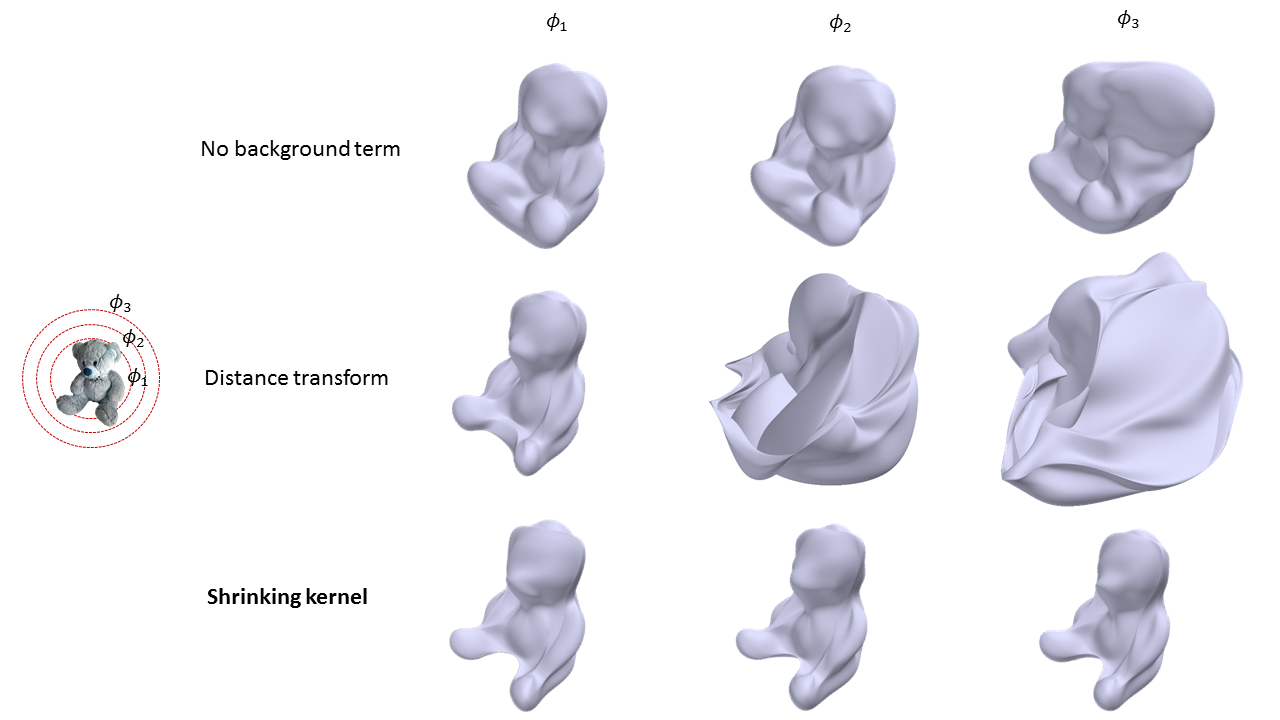

An Efficient Background Term for 3D Reconstruction and Tracking with Smooth Subdivision Surface Models, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017. ([video])

2015

Model-Based Tracking at 300Hz using Raw Time-of-Flight Observations, In IEEE International Conference on Computer Vision (ICCV), 2015. ([video])

2008

Modeling and Tracking Line-Constrained Mechanical Systems, In 2nd Workshop on Robot Vision (G. Sommer, R. Klette, eds.), volume 4931, 2008.

Markerless Motion Capture of Man-Machine Interaction, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2008.

2007

Scaled motion dynamics for markerless motion capture, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2007.

Online smoothing for markerless motion capture, In Pattern Recognition (Proc. DAGM), Springer, 2007.

Nonparametric density estimation with adaptive anisotropic kernels for human motion tracking, In Proc. 2nd International Workshop on Human Motion (A. Elgammal, B. Rosenhahn, R. Klette, eds.), Springer, volume 4814, 2007.

2006

High accuracy optical flow serves 3-D pose tracking: exploiting contour and flow based constraints, In European Conference on Computer Vision (ECCV) (A. Leonardis, H. Bischof, A. Pinz, eds.), Springer, volume 3952, 2006.

Nonparametric density estimation for human pose tracking, In Pattern Recognition (Proc. DAGM) (K. F. et al., eds.), Springer, volume 4174, 2006.

Conference Proceedings

2007

Region-based Pose Tracking, In Proc. 3rd Iberian Conference on Pattern Recognition and Image Analysis, Springer, 2007.

Occlusion Modeling by Tracking Multiple Objects, In Pattern Recognition (Proc. DAGM), Springer, 2007.