Large-Scale Direct SLAM for Omnidirectional Cameras

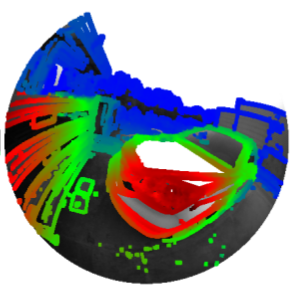

We propose a real-time, direct monocular SLAM method for omnidirectional or wide field-of-view fisheye cameras. Both tracking (direct image alignment) and mapping (pixel-wise distance filtering) are directly formulated for the unified omnidirectional model, which can model central imaging devices with a field of view well above 150°. This is in stark contrast to existing direct mono-SLAM approaches like DTAM or LSD-SLAM, which operate on rectified images, limiting the field of view to well below 180°. Not only does this allow us to observe, and reconstruct, a larger portion of the surrounding environment, but it also makes the system more robust to degenerate (rotation-only) movement. The two main contributions are (1) the formulation of direct image alignment for the unified omnidirectional model, and (2) a fast yet accurate approach to incremental stereo directly on distorted images. We evaluate our framework on real-world sequences taken with a 185° fisheye lens, and compare it to a rectified and a piecewise rectified approach.
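To illustrate the unified omnidirectional model mentioned above, here is a minimal sketch of its projection and unprojection in the commonly used form with a single extra parameter `xi`. The function names and the intrinsics in the usage note are illustrative assumptions and are not taken from the dataset calibration files. The sketch uses distance along the viewing ray rather than depth, because depth along the optical axis is not well defined for points more than 90° off-axis.

```python
import math


def project(point, fx, fy, cx, cy, xi):
    """Project a 3D point (camera frame) to pixel coordinates.

    With xi = 0 this reduces to the ordinary pinhole projection.
    """
    x, y, z = point
    norm = math.sqrt(x * x + y * y + z * z)
    denom = z + xi * norm
    if denom <= 0.0:
        # The point lies outside the region the model can image.
        raise ValueError("point is not projectable for this xi")
    return (fx * x / denom + cx, fy * y / denom + cy)


def unproject(pixel, distance, fx, fy, cx, cy, xi):
    """Map a pixel and a distance along its viewing ray back to a 3D point."""
    u, v = pixel
    mu = (u - cx) / fx
    mv = (v - cy) / fy
    r2 = mu * mu + mv * mv
    disc = 1.0 + (1.0 - xi * xi) * r2
    if disc < 0.0:
        raise ValueError("pixel lies outside the valid image region")
    s = (xi + math.sqrt(disc)) / (r2 + 1.0)
    # Unit-length viewing ray on the sphere, then scaled by the distance.
    ray = (s * mu, s * mv, s - xi)
    return tuple(distance * c for c in ray)
```

As a usage example with hypothetical intrinsics (`fx = fy = 300`, `cx = 320`, `cy = 240`, `xi = 0.9`), a point 92.5° off the optical axis, i.e. slightly behind the image plane, still projects to a finite pixel and is recovered by `unproject` when given its distance. A pinhole model (`xi = 0`) cannot represent such a point.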

Video

Dataset

- T1 (2:40 min @ 50 fps)
  - Video Preview: [Pinhole-Rectified] [Omnidirectional]
  - RosBag: [Pinhole-Rectified] [Omnidirectional]
  - Calibration File: [Pinhole-Rectified] [Omnidirectional]
- T2 (1:19 min @ 50 fps)
  - Video Preview: [Pinhole-Rectified] [Omnidirectional]
  - RosBag: [Pinhole-Rectified] [Omnidirectional]
  - Calibration File: [Pinhole-Rectified] [Omnidirectional]
- T3 (2:17 min @ 50 fps)
  - Video Preview: [Pinhole-Rectified] [Omnidirectional]
  - RosBag: [Pinhole-Rectified] [Omnidirectional]
  - Calibration File: [Pinhole-Rectified] [Omnidirectional]
- T4 (2:08 min @ 50 fps)
  - Video Preview: [Pinhole-Rectified] [Omnidirectional]
  - RosBag: [Pinhole-Rectified] [Omnidirectional]
  - Calibration File: [Pinhole-Rectified] [Omnidirectional]
- T5 (2:45 min @ 50 fps)
  - Video Preview: [Pinhole-Rectified] [Omnidirectional]
  - RosBag: [Pinhole-Rectified] [Omnidirectional]
  - Calibration File: [Pinhole-Rectified] [Omnidirectional]

License

Unless stated otherwise, all data in the SLAM for Omnidirectional Cameras Dataset is licensed under a Creative Commons Attribution 4.0 License (CC BY 4.0).