Scene Flow Estimation

Scene flow is the dense or semi-dense 3D motion field of a scene that moves completely or partially with respect to a camera. The potential applications of scene flow are numerous. In robotics, it can be used for autonomous navigation and/or manipulation in dynamic environments where the motion of surrounding objects needs to be predicted. It could also complement and improve state-of-the-art Visual Odometry and SLAM algorithms, which typically assume rigid or quasi-rigid environments. Furthermore, it could be employed for human-robot or human-computer interaction, as well as for virtual and augmented reality.
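To make the definition concrete, assume the camera is an ideal pinhole. The focal length `f` and principal point `cx`, `cy` below are hypothetical values. Under that assumption, the optical flow of a pixel is the image-plane projection of its 3D scene flow vector. The minimal sketch below computes that projection. It is illustrative only and is not part of any method described on this page.

```python
def project(point, f, cx=0.0, cy=0.0):
    """Project a 3D point (X, Y, Z) in camera coordinates with an ideal pinhole model."""
    x, y, z = point
    if z <= 0.0:
        raise ValueError("point must lie in front of the camera (Z > 0)")
    return (f * x / z + cx, f * y / z + cy)


def optical_flow_from_scene_flow(point, motion, f, cx=0.0, cy=0.0):
    """2D optical flow induced by moving `point` by the 3D scene flow vector `motion`."""
    moved = tuple(p + m for p, m in zip(point, motion))
    u0, v0 = project(point, f, cx, cy)
    u1, v1 = project(moved, f, cx, cy)
    return (u1 - u0, v1 - v0)


# A point 4 m in front of the camera moving 0.2 m to the right.
print(optical_flow_from_scene_flow((1.0, 0.5, 4.0), (0.2, 0.0, 0.0), f=400.0))  # (20.0, 0.0)
```

The projection discards information. A point moving along the viewing ray produces no optical flow at all, and recovering that component is what makes scene flow harder than optical flow.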

Contact: Mariano Jaimez

Scene Flow with RGB-D Cameras

PD-Flow: We have developed the first dense real-time scene flow method for RGB-D cameras. Within a variational framework, photometric and geometric consistency are imposed between consecutive RGB-D frames, together with TV regularization of the flow. Because RGB-D cameras provide depth data, the flow field is regularized on the 3D surface (or set of surfaces) of the observed scene rather than on the image plane. This leads to more geometrically consistent results. The minimization problem is solved efficiently by a primal-dual algorithm implemented on a GPU. This work was developed in conjunction with the MAPIR group (University of Málaga). Code is available on GitHub: https://github.com/MarianoJT88/PD-Flow.
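PD-Flow's actual energy, with photometric and geometric data terms and TV regularization on the 3D surface, is not reproduced here. As a much simpler stand-in, the sketch below shows the structure of a first-order primal-dual iteration in the style of Chambolle and Pock. It is applied to a 1-D TV-regularized least-squares problem, min_u (λ/2)‖u − f‖² + ‖∇u‖₁. Each iteration consists of three steps:

- a dual ascent step, followed by projection onto the unit ball;
- a primal descent step through the proximal operator of the data term;
- an over-relaxation step.

The function names, the weight `lam`, and the step sizes are illustrative assumptions.

```python
def grad(u):
    """Forward differences, with 0 at the last sample (Neumann boundary)."""
    return [u[i + 1] - u[i] for i in range(len(u) - 1)] + [0.0]


def div(p):
    """Discrete divergence, defined so that <grad u, p> == -<u, div p>."""
    n = len(p)
    if n == 1:
        return [0.0]
    out = [p[0]]
    for i in range(1, n - 1):
        out.append(p[i] - p[i - 1])
    out.append(-p[n - 2])
    return out


def total_variation(u):
    return sum(abs(g) for g in grad(u))


def tv_denoise_primal_dual(f, lam=4.0, iters=300, tau=0.25, sigma=0.5):
    """Minimise (lam/2)*||u - f||^2 + ||grad u||_1 by primal-dual iterations.

    Convergence needs tau * sigma * ||grad||^2 < 1. In 1-D ||grad||^2 < 4,
    so the defaults give 0.125 * 4 = 0.5.
    """
    u = list(f)
    u_bar = list(f)
    p = [0.0] * len(f)
    for _ in range(iters):
        # Dual ascent, then pointwise projection onto |p_i| <= 1.
        g = grad(u_bar)
        p = [max(-1.0, min(1.0, pi + sigma * gi)) for pi, gi in zip(p, g)]
        # Primal descent (u + tau * div p), then the prox of the quadratic data term.
        d = div(p)
        u_old = u
        u = [(ui + tau * di + tau * lam * fi) / (1.0 + tau * lam)
             for ui, di, fi in zip(u, d, f)]
        # Over-relaxation with theta = 1.
        u_bar = [2.0 * un - uo for un, uo in zip(u, u_old)]
    return u


noisy_step = [0.0, 0.1, -0.1, 0.05, 1.0, 0.9, 1.1, 0.95]
smoothed = tv_denoise_primal_dual(noisy_step)
print(total_variation(noisy_step), total_variation(smoothed))
```

Every update in the loop is element-wise or a local difference. This locality is why primal-dual schemes of this kind parallelize well on GPUs.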

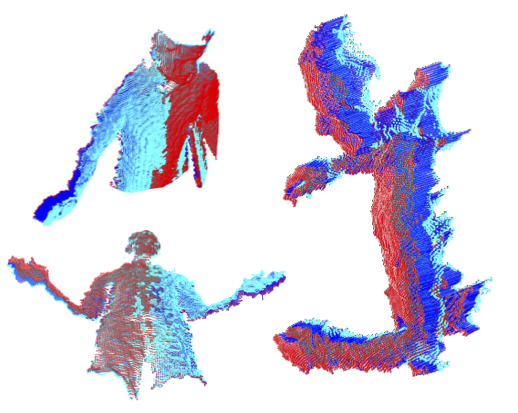

MC-Flow: We have proposed a novel joint registration and segmentation approach to estimate scene flow from RGB-D images. Instead of assuming that the scene is composed of a number of independent rigidly moving parts, we use non-binary labels to capture non-rigid deformations at transitions between the rigid parts of the scene. Thus, the velocity of any point can be computed as a linear combination (interpolation) of the estimated rigid motions, which provides better results than traditional sharp piecewise segmentations. Within a variational framework, the smooth segments of the scene and their corresponding rigid velocities are alternately refined until convergence. We use a weighted quadratic regularization term to favor smooth non-binary labels, and we also test our algorithm with weighted TV. This work was developed in conjunction with the MAPIR group (University of Málaga).
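The idea of interpolating rigid motions with non-binary labels can be sketched as follows. This sketch makes two assumptions:

- each rigid motion is given as a twist, with angular velocity `omega` and linear velocity `v`;
- each point carries one non-negative weight per motion, and the weights sum to 1.

These representations and the helper names are illustrative assumptions, not MC-Flow's implementation.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Twist = Tuple[Vec3, Vec3]  # (angular velocity omega, linear velocity v)


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rigid_velocity(point: Vec3, twist: Twist) -> Vec3:
    """Velocity v + omega x point of a point moving with one rigid motion."""
    omega, v = twist
    w = cross(omega, point)
    return (v[0] + w[0], v[1] + w[1], v[2] + w[2])


def blended_velocity(point: Vec3, labels: Sequence[float],
                     motions: Sequence[Twist]) -> Vec3:
    """Weighted combination of the rigid velocities, using one label per rigid motion."""
    if len(labels) != len(motions):
        raise ValueError("need exactly one label per rigid motion")
    if any(w < 0.0 for w in labels) or abs(sum(labels) - 1.0) > 1e-6:
        raise ValueError("labels must be non-negative and sum to 1")
    vel = [0.0, 0.0, 0.0]
    for weight, twist in zip(labels, motions):
        r = rigid_velocity(point, twist)
        for i in range(3):
            vel[i] += weight * r[i]
    return (vel[0], vel[1], vel[2])


# A pure translation along x and a pure rotation about z.
motions = [((0.0, 0.0, 0.0), (1.0, 0.0, 0.0)), ((0.0, 0.0, 1.0), (0.0, 0.0, 0.0))]
print(blended_velocity((1.0, 0.0, 0.0), [0.5, 0.5], motions))  # (0.5, 0.5, 0.0)
```

Because v + ω × x is linear in (ω, v), blending the per-point velocities is equivalent to blending the twists themselves. With one-hot labels, the scheme reduces to a conventional sharp piecewise rigid segmentation.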

Scene Flow with Neural Networks

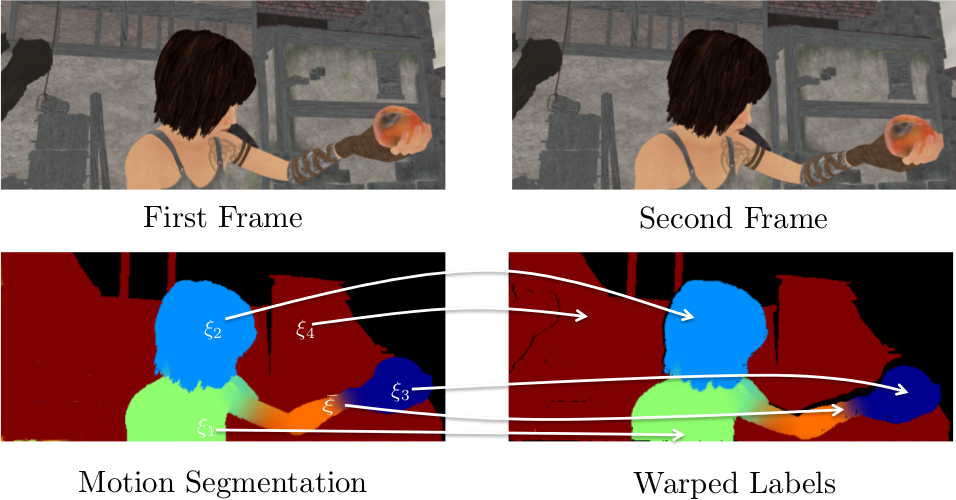

Recent work has shown that optical flow estimation can be formulated as a supervised learning task and successfully solved with convolutional networks. Training of the so-called FlowNet was enabled by a large, synthetically generated dataset. We extend the concept of optical flow estimation via convolutional networks to disparity and scene flow estimation. To this end, we propose three synthetic stereo video datasets with sufficient realism, variation, and size to successfully train large networks. Our datasets are the first large-scale datasets to enable training and evaluation of scene flow methods. Besides the datasets, we present a convolutional network for real-time disparity estimation that provides state-of-the-art results. By combining a flow estimation network with a disparity estimation network and training them jointly, we demonstrate the first scene flow estimation with a convolutional network.
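The outputs of a flow network and a disparity network can be combined geometrically by expressing scene flow as optical flow plus a change in disparity. To do this, look up the disparity at time t+1 at the position each pixel moves to, then subtract the pixel's disparity at time t. The sketch below does this for a single pixel with nearest-neighbour lookup. The parameterization, the list-of-rows layout, and the function name are assumptions for illustration. They do not describe the jointly trained network itself.

```python
from typing import List, Optional, Tuple

Grid = List[List[float]]
FlowField = List[List[Tuple[float, float]]]


def scene_flow_at(disp_t: Grid, disp_t1: Grid, flow: FlowField,
                  x: int, y: int) -> Optional[Tuple[float, float, float]]:
    """Return (u, v, disparity change) for pixel (x, y), or None if it leaves the image.

    disp_t, disp_t1: disparity maps at times t and t+1, indexed [row][column].
    flow: optical flow from t to t+1, with one (u, v) pair per pixel.
    """
    u, v = flow[y][x]
    # Nearest-neighbour position of the pixel at time t+1.
    xt, yt = round(x + u), round(y + v)
    height, width = len(disp_t1), len(disp_t1[0])
    if not (0 <= xt < width and 0 <= yt < height):
        return None
    return (u, v, disp_t1[yt][xt] - disp_t[y][x])


disp_t = [[10.0, 10.0, 10.0]]
disp_t1 = [[10.0, 12.0, 14.0]]
flow = [[(1.0, 0.0), (1.0, 0.0), (1.0, 0.0)]]
print(scene_flow_at(disp_t, disp_t1, flow, 0, 0))  # (1.0, 0.0, 2.0)
print(scene_flow_at(disp_t, disp_t1, flow, 2, 0))  # None
```

Since disparity is inversely proportional to depth, a positive disparity change means the point has moved closer to the cameras.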

Contact: Philip Haeusser

Scene Flow with Stereo Cameras

We developed variational algorithms to estimate dense depth and 3D motion fields from stereo video sequences. Our work was accepted as an oral presentation at the European Conference on Computer Vision (ECCV) 2008. The journal version appeared in the International Journal of Computer Vision in 2011. Moreover, a Springer book on scene flow appeared in 2011; see below for additional work in this area.
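For a rectified stereo pair, a pixel's disparity fixes its depth. Depth and 3D motion can therefore be read off from the disparities at two time steps plus the optical flow between them. The sketch makes these assumptions:

- an ideal rectified pinhole rig with focal length `f` in pixels and baseline `baseline` in metres;
- pixel coordinates measured relative to the principal point;
- a per-pixel estimate represented as (u, v, d, d_next).

These choices and names are assumptions for illustration. The variational methods above estimate such quantities densely from the images, which this snippet does not attempt.

```python
def triangulate(x, y, d, f, baseline):
    """Back-project pixel (x, y) with disparity d (pixels) into the left camera frame."""
    if d <= 0.0:
        raise ValueError("disparity must be positive")
    z = f * baseline / d  # depth from disparity
    return (x * z / f, y * z / f, z)


def scene_flow_3d(x, y, u, v, d, d_next, f, baseline):
    """3D motion of the point seen at (x, y) with disparity d at time t.

    At time t+1 the point appears at (x + u, y + v) with disparity d_next.
    """
    p0 = triangulate(x, y, d, f, baseline)
    p1 = triangulate(x + u, y + v, d_next, f, baseline)
    return tuple(b - a for a, b in zip(p0, p1))


# A point whose disparity doubles has halved its distance to the cameras.
print(scene_flow_3d(50.0, 0.0, 50.0, 0.0, 10.0, 20.0, f=500.0, baseline=0.1))  # (0.0, 0.0, -2.5)
```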

In collaboration with Daimler Research, we deployed these methods for driver assistance and visual scene analysis. For these efforts, our coauthors from Daimler Research were nominated for the Deutscher Zukunftspreis 2011 and received the Karl-Heinz-Beckurts-Preis 2012.

Related publications

Books

2011

Stereoscopic Scene Flow for 3D Motion Analysis, Springer, 2011.

Journal Articles

2011

Stereoscopic Scene Flow Computation for 3D Motion Understanding, In International Journal of Computer Vision, volume 95, 2011.

Conference and Workshop Papers

2017

Multiframe Scene Flow with Piecewise Rigid Motion, In International Conference on 3D Vision (3DV), 2017.

Spotlight Presentation

Fast Odometry and Scene Flow from RGB-D Cameras based on Geometric Clustering, In Proc. of the IEEE Int. Conf. on Robotics and Automation (ICRA), 2017.

2015

Motion Cooperation: Smooth Piece-Wise Rigid Scene Flow from RGB-D Images, In Proc. of the Int. Conference on 3D Vision (3DV), 2015.

A Primal-Dual Framework for Real-Time Dense RGB-D Scene Flow, In Proc. of the IEEE Int. Conf. on Robotics and Automation (ICRA), 2015.

2009

Detection and Segmentation of Independently Moving Objects from Dense Scene Flow, In Energy Minimization Methods in Computer Vision and Pattern Recognition (EMMCVPR) (D. Cremers, Y. Boykov, A. Blake, F. R. Schmidt, eds.), volume 5681, 2009.

2008

Efficient Dense Scene Flow from Sparse or Dense Stereo Data, In European Conference on Computer Vision (ECCV), 2008.