
Deep Learning

Contact: Dr. Laura Leal-Taixe, Vladimir Golkov, Tim Meinhardt, Qunjie Zhou, Patrick Dendorfer

Deep Learning is a powerful machine learning tool that has shown outstanding performance in many fields. One of its greatest successes has been large-scale object recognition with Convolutional Neural Networks (CNNs). The main strength of CNNs is that they learn data representations directly from the data, in a hierarchical, layer-based structure.

We apply Convolutional Neural Networks to computer vision tasks such as optical flow estimation and scene understanding, and develop state-of-the-art methods for them.

Learning by Association

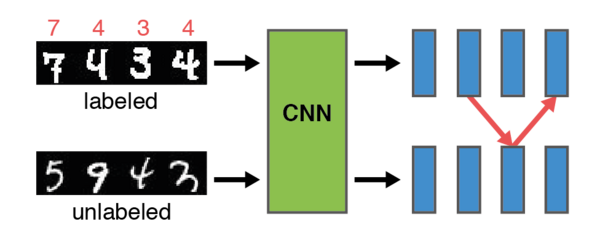

However, CNNs are trained very differently from the way a child learns: they follow a purely supervised training scheme that requires a label for every training example. Neural networks have huge numbers of parameters to optimize, so they need large amounts of labeled training data, which can be costly and time-consuming to obtain. It is therefore desirable to train machine learning models without labels (unsupervised learning) or with only a fraction of the data labeled (semi-supervised learning).

We propose a novel training method that follows an intuitive approach: learning by association. We feed a batch of labeled data and a batch of unlabeled data through a network, producing embeddings for both batches. An imaginary walker is then sent from samples in the labeled batch to samples in the unlabeled batch. Its transitions follow a probability distribution obtained from the similarity of the respective embeddings; we refer to this as an association.
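The walker's first step can be sketched in a few lines. This is a minimal sketch under our own assumptions: the similarity of two embeddings is taken to be their dot product, and the transition probabilities are a softmax of these similarities over the unlabeled batch. The function names `association_probs` and `round_trip_probs` are hypothetical, and the round trip back to the labeled batch is an illustrative extension showing how such associations can be chained.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def association_probs(source, target):
    """P[i][j]: probability that a walker at source embedding i steps to
    target embedding j (assumed: dot-product similarity, softmax over j)."""
    return [softmax([dot(s, t) for t in target]) for s in source]


def round_trip_probs(labeled, unlabeled):
    """P[i][k]: probability of walking labeled i -> some unlabeled j -> labeled k."""
    p_ab = association_probs(labeled, unlabeled)
    p_ba = association_probs(unlabeled, labeled)
    return [
        [sum(p_ab[i][j] * p_ba[j][k] for j in range(len(unlabeled)))
         for k in range(len(labeled))]
        for i in range(len(labeled))
    ]


# Toy embeddings: two labeled samples and two unlabeled samples close to them.
labeled = [[1.0, 0.0], [0.0, 1.0]]
unlabeled = [[0.9, 0.1], [0.1, 0.9]]
p_ab = association_probs(labeled, unlabeled)
p_aba = round_trip_probs(labeled, unlabeled)
```

Each row of `p_ab` is a probability distribution, and a walker starting at a labeled sample is more likely to step to the unlabeled sample whose embedding is closer to it. In this toy example, round trips are also more likely to return to the labeled sample they started from than to the other one.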

In this line of work, we have published papers on semi-supervised training, domain adaptation, multimodal training (text and images), and unsupervised training/clustering. More information can be found here.

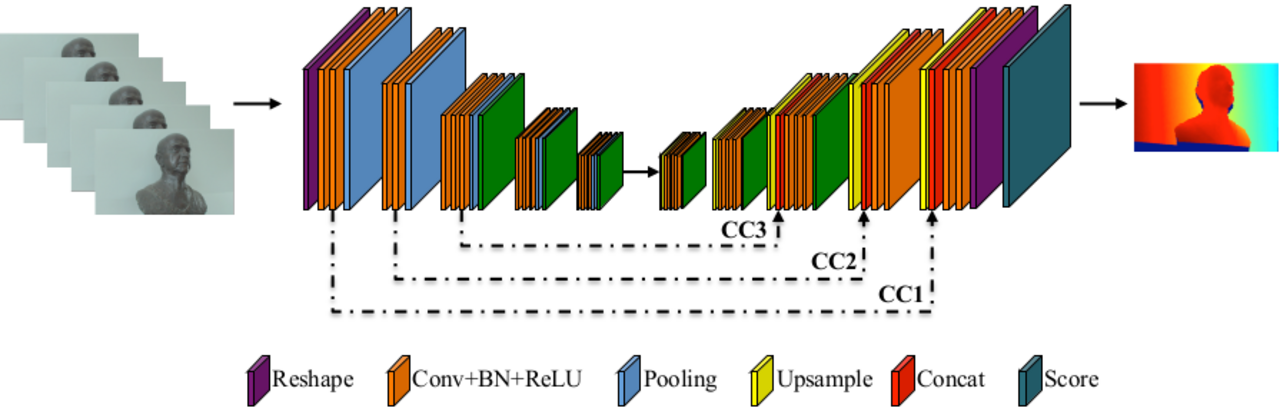

Deep Depth From Focus

FlowNet

In our recent ICCV'15 paper, we presented two CNN architectures that estimate optical flow from a single image pair. The networks are trained end-to-end on a GPU, and our system performs as well as state-of-the-art techniques.
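To make the comparison between the two images more concrete, the sketch below shows a correlation of two feature maps, the operation at the core of the correlation-based architecture (called FlowNetCorr in the paper). It is a simplified, pure-Python illustration under our own assumptions: each pixel is compared with a single pixel of the second map (no patch aggregation, no striding), and displacements that leave the image score zero. The names `correlation` and `best_displacement` are hypothetical. The winner-take-all readout in `best_displacement` is for illustration only; in the network, the correlation volume is passed on to further convolutional layers that predict the flow.

```python
def correlation(f1, f2, max_disp):
    """Correlate two feature maps given as H x W grids of feature vectors.

    Returns a dict mapping each displacement (dy, dx) with |dy|, |dx| <= max_disp
    to an H x W grid holding <f1[y][x], f2[y + dy][x + dx]>; positions whose
    displaced pixel falls outside the map are 0.
    """
    h, w = len(f1), len(f1[0])
    volume = {}
    for dy in range(-max_disp, max_disp + 1):
        for dx in range(-max_disp, max_disp + 1):
            plane = [[0.0] * w for _ in range(h)]
            for y in range(h):
                for x in range(w):
                    y2, x2 = y + dy, x + dx
                    if 0 <= y2 < h and 0 <= x2 < w:
                        plane[y][x] = sum(a * b for a, b in zip(f1[y][x], f2[y2][x2]))
            volume[(dy, dx)] = plane
    return volume


def best_displacement(volume, y, x):
    """Illustrative winner-take-all readout: displacement with the highest score."""
    return max(volume, key=lambda d: volume[d][y][x])


def one_hot(i, n):
    return [1.0 if k == i else 0.0 for k in range(n)]


# Toy example: 3 x 3 maps with a distinct one-hot feature per pixel;
# the second map is the first one shifted one pixel to the right.
H, W = 3, 3
f1 = [[one_hot(y * W + x, H * W) for x in range(W)] for y in range(H)]
f2 = [[f1[y][x - 1] if x > 0 else [0.0] * (H * W) for x in range(W)] for y in range(H)]
volume = correlation(f1, f2, max_disp=1)
print(best_displacement(volume, 1, 1))  # (0, 1): the centre pixel moved one step right
```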

Unsupervised Domain Adaptation for Vehicle Control

Even though end-to-end supervised learning has shown promising results for sensorimotor control of self-driving cars, its performance depends strongly on the weather conditions under which the model was trained, and it generalizes poorly to unseen conditions. We therefore show how knowledge can be transferred to new weather conditions using semantic maps, without the need to obtain new ground-truth data. To this end, we propose to divide the task of vehicle control into two independent modules: a control module, which is trained only on one weather condition for which labeled steering data is available, and a perception module, which serves as an interface between new weather conditions and the fixed control module. To generate the semantic data needed to train the perception module, we propose a generative adversarial network (GAN)-based model that retrieves the semantic information for the new conditions in an unsupervised manner. We introduce a master-servant architecture, in which the master model (semantic labels available) trains the servant model (semantic labels not available).
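The modular decomposition can be sketched as function composition. This is a toy illustration under our own assumptions, not the published implementation: images are flat lists of intensities, a semantic map holds one class id per pixel, and `toy_perception`, `toy_control` and `toy_translate` are hypothetical stand-ins for the trained perception network, the fixed control network and the GAN generator. The hypothetical `pseudo_label` helper shows one way a master model could supply training targets for a servant: new-weather images are first translated into the condition the master was trained on, and the master's semantic output becomes the servant's target.

```python
from typing import Callable, List, Sequence, Tuple

Image = Sequence[float]   # hypothetical: flattened grayscale image
SemanticMap = List[int]   # hypothetical: one class id per pixel


def drive(image: Image,
          perception: Callable[[Image], SemanticMap],
          control: Callable[[SemanticMap], float]) -> float:
    """Steering command from the modular pipeline.

    The control module only sees semantic maps, so adapting to a new weather
    condition only requires swapping in a new perception module.
    """
    return control(perception(image))


def pseudo_label(images: Sequence[Image],
                 translate: Callable[[Image], Image],
                 master: Callable[[Image], SemanticMap]) -> List[Tuple[Image, SemanticMap]]:
    """(image, target) pairs for training a servant perception module.

    translate: assumed generator mapping a new-weather image to the weather
    condition the master was trained on; master: perception module trained
    with semantic labels in that condition.
    """
    return [(img, master(translate(img))) for img in images]


def toy_perception(image: Image) -> SemanticMap:
    # Class 1 ("road") for bright pixels, class 0 otherwise.
    return [1 if p > 0.5 else 0 for p in image]


def toy_control(sem: SemanticMap) -> float:
    # Positive values steer toward the right half when it contains more road pixels.
    half = len(sem) // 2
    return (sum(sem[half:]) - sum(sem[:half])) / len(sem)


def toy_translate(image: Image) -> Image:
    # Stand-in for a GAN generator: brighten a dark (e.g. night) image.
    return [min(1.0, p + 0.3) for p in image]


print(drive([0.2, 0.1, 0.9, 0.8], toy_perception, toy_control))  # 0.5
targets = pseudo_label([[0.1, 0.3, 0.6, 0.7]], toy_translate, toy_perception)
print(targets[0][1])  # [0, 1, 1, 1]
```

Keeping `control` fixed and passing it unchanged to `drive` mirrors the design choice described above: only the perception side has to be adapted to a new weather condition.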